Three years ago this weekend, the iPad was released for sale in the USA. In Australia, it came in July, with the 3G configuration.

I went back to what I wrote about the iPad after its announcement at an Apple keynote in January 2010, and then in the days before its release. It makes for edifying reading given how the tech pundits got it so, so wrong, as evidenced by Asymco's Horace Dediu here.

How I know the iPad will be a success – unusual sources of evidence: potential users

Snippet:

“The second thing: about 21 seconds into the video, there is one game that looks like a jigsaw, in which pieces of yellow cheese are assembled into one piece. Here's a still shot:

When I first saw this clip, I was reminded of a widely used IQ test known as the WISC (Wechsler Intelligence Scale for Children), which contains a series of tests, some timed, that together measure both verbal and non-verbal aspects of intelligence.

The equipment comes in a box and costs several thousand dollars. Several of the tests use jigsaw-like elements, asking the child to assemble them into a gestalt. At first, the child is told what the final assembly looks like. In the more challenging items, the child is merely told to assemble the pieces without knowing what they will form.

There are also small individual squares (3″ x 3″) containing elements of an illustrated story, for which there is one best order. The child starts with just two pictures, which are very easy to place in the order in which the events occur. The stories get more complicated and take longer as the child progresses.

As I was showing the Redfish video a second time, I asked the audience to consider how the WISC could be almost entirely performed on the iPad, together with a stopwatch function that could automatically time and enter scores, with no need for the manual to norm the results. One block test, which requires small blocks to be laid out according to an illustration, may not be doable on the iPad; but if imagination is allowed to reign, the next generation of children could be tested with a WISC designed specifically for the iPad, with a new block test normed for a new generation. Readers should bear in mind that psychologists don't simply gather scores, but also look keenly at how the child goes about the task and how he or she deals with frustration, failure or success: things that aren't normed but are important clinical indicators nonetheless.

But all this is a digression for interested readers, away from the point I wish to make: this was a small natural experiment into the appeal of the iPad for two groups. The first was an audience with concerns about adopting new technologies, who nonetheless understood my enthusiasm for the iPad and where I think it fits in professionally. The second, of course, was the young boys who ignored the DVD that had so occupied them to stare gobsmacked at the Redfish iPad video. You could almost see them aching to get their hands on one and play the same game, one of many Redfish will be releasing for educational purposes.

I have every confidence their excitement is the tip of the iceberg, and naysayers will be looking very glum in a year’s time for their shallow prognostications.”

UPDATE: Pearson, the huge publishing house with which Apple has done deals for iPad textbooks (see here), holds the rights to the WISC and its adult version, the WAIS. I am now beta testing iPad versions of these tests, which use two iPad 4s connected via Bluetooth so the clinician can see what the test subject is doing. Pearson "got it" when others didn't.

Thinking about the iPad in a professional psychology practice – in response to a fifteen-year-old's dissing of it as useless

Snippet:

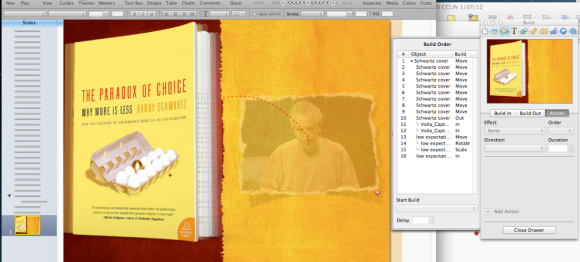

“So here are my thoughts, without yet getting my hands on it, on how I might use the iPad in a professional psychology setting, as well as how to use it as a presentation tool (an addendum to be added once I actually see how Keynote works).

1. Intake: Patients waiting to meet me can fill in questionnaires or biographical information (much of it radio buttons or tick boxes), using either the built-in or an outrigger keyboard. In the future, a pen device for handwriting might become available.

2. Billing: As of now, many patients pay directly using internet banking, or via PayPal if using credit cards. Much like iccpay.com, I imagine we'll see instant credit card payment systems evolve for the iPad.

3. Patient database management, using an evolved form of Bento or a specific Numbers template that is easily transferred back to the MacBook Pro.

4. Showing educational movies, either on the iPad itself or via a USB or wireless connection to a TV or data projector.

5. Testing: I can see a number of specialist psychological testing outfits developing normed tests for use with children and adults on the iPad.

6. Distractor for children: Sometimes a child in a session needs to be kept occupied while the parents are being interviewed, and the iPad with its games will be great for this. The last thing I want to give them is my MacBook Pro.

7. Information to read about their disorder or malady, which can then be printed out at will. Yes, it can be done on the MacBook Pro, but that is always hooked up to monitors, backup drives and my iPhone and can't be moved during a session. It is much easier simply to hand a patient the iPad to read.

8. Audio recording of the session. I record all sessions (patients remember only about 10% of a session's content), and the AAC or MP3 file can be emailed to them straight from the iPad. Again, this can be done on the MacBook Pro using an external microphone like a Blue Snowball.

9. Specialised measuring tools, such as biofeedback devices like the emWave I now use to monitor heart rate variability, useful in stress management and arousal modulation. If patients get their own iPads with the software (possibly in development now), they can practise the techniques I've taught, and their data can hopefully be transferred to my main database for comparison, revealing improvements over time.

10. A miniature whiteboard using Keynote to highlight ideas and demonstrate concepts.

These are just a few ideas thrown together without too much effort. Once the first of a new generation of apps is released, no doubt surprising us with their look, feel and innovation, the ball will start rolling and the penny will drop. For myself, I can see workshops ahead on using the iPad in professional health consulting, and hopefully hooking up with developers with an interest in psychology to create new apps.”

Current Status: My accuracy record for all these predictions, before even getting my hands on the iPad:

100%

I am particularly proud of #9, the emWave heart rate monitoring device. After Macworld 2009, I visited the developers of this program in Santa Cruz and implored them to develop for the iPhone instead of their dinky portable device. They refused, citing exorbitant development costs. I told them flat out they were wrong: the iPhone would surge in sales as more developers came on board, and if they didn't develop for it, someone else would.

In March this year, the emWave for iPad and iPhone was released, known as Inner Balance (review coming soon). The US Navy, in an effort to increase mental toughness and reduce PTSD in its corps, is experimenting with the iPad and such a system.

#10 was achieved through Doceri, another app I am a beta tester for. It has now been taken up by UPenn for 1,000 seats in its lecture theatres.

Blog entry for March 28, 2010:

While the 3-year-old will yelp with delight when they discover the iPad's games, the 80-year-old will quietly say, “I get it. This is what computing's about.”

Snippet:

“Seated at the same dinner table last Friday were students who entered the course after I had completed my studies, and whom I've met at other functions organised by this very social graduate group. One, Winston, works for a very large car manufacturer whose world headquarters are in Detroit and which received bailout money in recent months. The company has been part of the Australian manufacturing sector since the 1940s, and its vehicles remain very popular with Australians.

Somehow, the discussion moved to the iPad, perhaps after I had excused myself from the table to answer my iPhone; on my return, Winston mentioned he was interested in getting an iPhone too. I suggested he wait a little while, perhaps until June or July, when a new model might become available, and from there the discussion turned to the iPhone's place in business now that it could work with Microsoft Exchange. It was a quick skip to speculation about the iPad.

Winston put me on the spot to explain why the iPad was a better choice than a netbook, which in Australia would be half the price and pack more features, such as a camera, a “real” keyboard, iPhone tethering, and the full Microsoft Office suite.

My response was that the iPad would be better considered not as a computer in the common sense of the term, i.e. a notebook or desktop device, but as a knowledge management tool in its own right, and I rattled off the sort of apps it would inherit from the iPhone as well as those likely to be designed to take advantage of its speed and screen size.

I suggested to Winston that the iPad would have limited initial appeal to computer wonks who merely wanted a smaller form factor for Windows-based computing. It would not meet their needs. But I then suggested that there would be huge numbers of ordinary people with very limited knowledge of computer innards and workings (that is, the vast bulk of the Australian population) for whom the iPad would elicit the spontaneous remark:

So this is what computing should be!

No menu bars, no operating system to fiddle with, instant on and ready to use at the simple touch of one button, yet with powerful business applications such as iWork, Bento and Evernote available should this group of users work its way up the skill and learning curve.

When Winston said he had elderly parents who had never touched a computer but had expressed interest in what one might bring to their lives, I asked him in all honesty which he would buy them: a $400 netbook running Windows XP (then add the cost of Microsoft Office 2007), or a $650 iPad plus $50 for iWork and Bento?

The picture of a 75-year-old mum and dad sitting on their couch wrestling with a netbook, with its tiny keyboard and poor-resolution screen, was enough to make Winston pause momentarily and reconsider his options. Yes, for him, with his background in engineering, a netbook was a no-brainer: a good match for the problems he wished to solve.”

Current Status: Totally nailed it!

Where to go to find people using iPads this weekend? In all sorts of interesting places!

Snippet:

We’re just a few days away from the iPad falling into users’ hands, in time for Spring Break, Easter, and Passover.

So where might you go to find iPads if you weren't lucky enough to order one for yourself? Well, as the video below shows, a whole variety of places, perhaps even the White House Passover seder hosted by Barack and Steve himself!

Enjoy!